User guide

This guide will help you navigate the platform’s LLM Tools section to use pre-trained open source models for inference. Follow these steps to select a model, input your query data, and understand the results.

Steps to Perform Inference

1. Navigate to the "LLM Tools" > "Open Source Inferencing" Section

- Access the platform’s main navigation menu.

- Click on LLM Tools to expand the tools submenu.

- Select Open Source Inferencing from the list.

- This section contains a curated collection of pre-trained models available for immediate use.

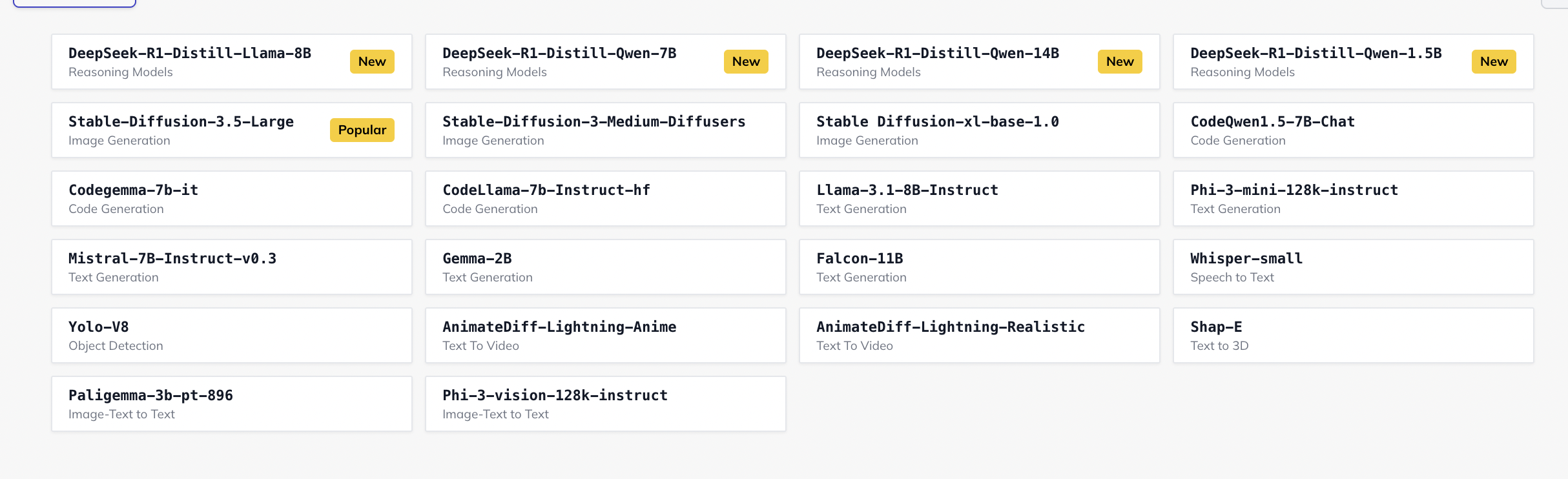

2. Browse Available Pre-Trained Models

- The platform will present a gallery or list of models, each specialized for different use cases such as text generation, classification, summarization, or question answering.

- Take note of important details such as model size, architecture, supported tasks, and any additional notes provided.

Info: You can also filter and search the list to find the model you need.

3. Select a Model That Aligns with Your Needs

- Review the brief descriptions and intended applications of each model.

- Choose the model best suited to your specific task or project requirements.

- The selection might be based on factors like performance metrics, supported input/output formats, or compatibility with your data.

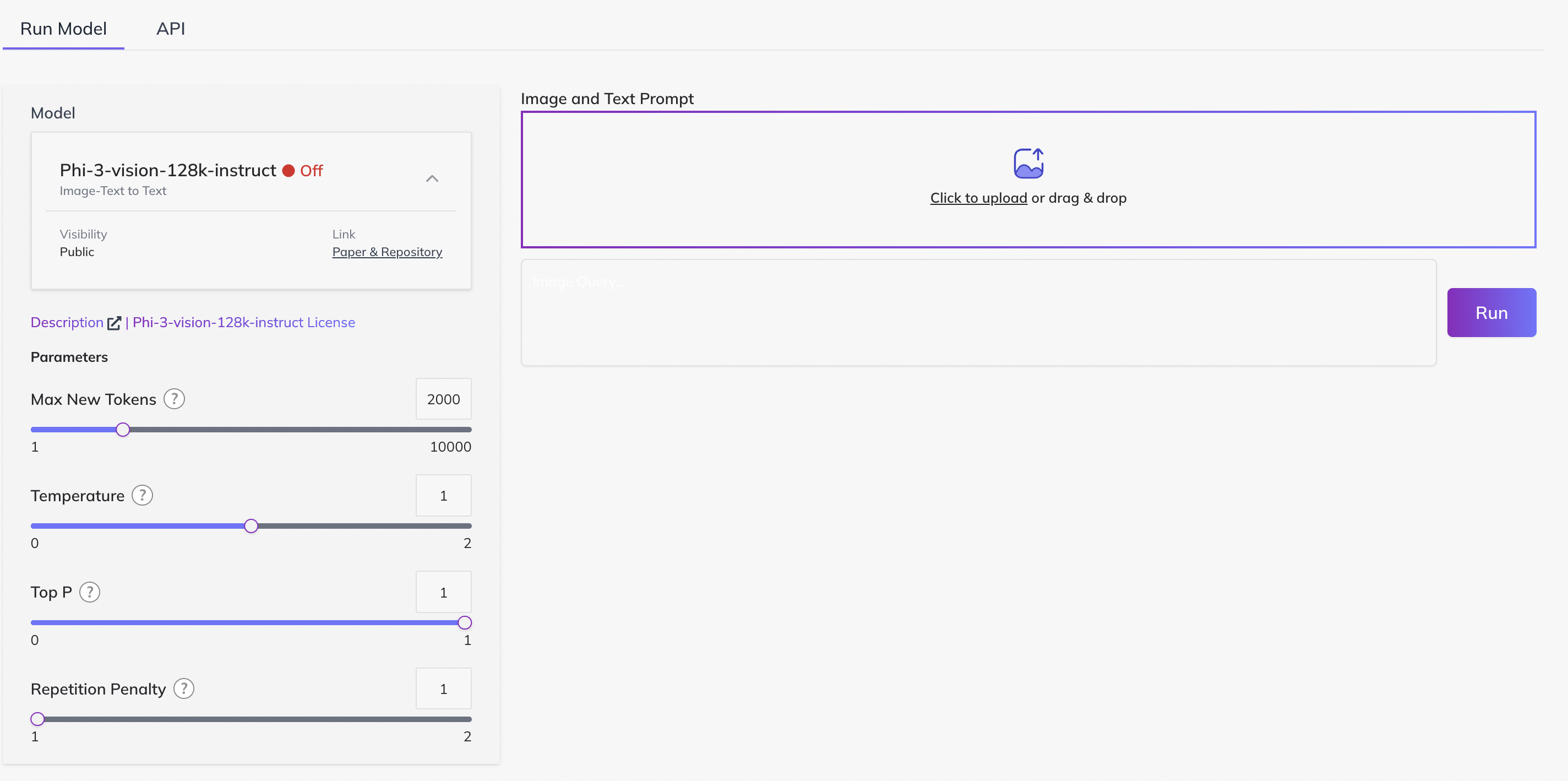

4. Access Model Details and Inference Interface

- Click on the chosen model to open its dedicated page.

- Here you will find:

  - Detailed information about the model architecture and capabilities.

  - Usage instructions.

  - Access to the inference interface where you can input your data.

5. Prepare Your Query Data in the Required Format

- Refer to the model’s documentation to understand the expected input format.

- Common input formats might include plain text, JSON, CSV, or other structured forms.

- Ensure your query data complies with any constraints such as token limits or required fields.
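
A quick pre-flight check can catch empty or oversized inputs before you submit them. The sketch below is only an illustration: the helper name `check_prompt_file` and the default limit of 1000 words are assumptions, and word count is just a rough stand-in for token count, since each model tokenizes text differently. Take the real limit from the model's documentation.

```shell
#!/bin/sh
# Rough pre-flight check for a plain-text prompt file.
# Hypothetical helper: the name and the default limit are illustrative only.
# Word count is a crude proxy for token count, not an exact measure.
check_prompt_file() {
    file="$1"
    max_words="${2:-1000}"

    # -s is true only if the file exists and is not empty.
    if [ ! -s "$file" ]; then
        echo "error: '$file' is missing or empty" >&2
        return 1
    fi

    # Strip whitespace padding that some wc implementations add.
    words=$(wc -w < "$file" | tr -d '[:space:]')
    if [ "$words" -gt "$max_words" ]; then
        echo "error: $words words exceeds the limit of $max_words" >&2
        return 1
    fi

    echo "ok: $words words"
}
```

For example, `check_prompt_file prompt.txt 500` reports the word count if the file is within the limit and returns a non-zero status otherwise.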

6. Input Your Query Data into the Inference Interface

- You can either paste your query directly into the provided input field or upload a file if supported.

- Double-check that the data matches the required format to avoid errors during inference.

- An API section is also provided, so you can call the model from the command line and save the response locally:

      export API_KEY=<PASTE_API_KEY_HERE>

      curl -X POST "https://platform.qubrid.com/api/model/Phi-3-vision-128k-instruct" \
        -H "Authorization: Bearer $API_KEY" \
        --form 'file=@"mango.jpeg"' \
        --form 'prompt="what this image says"'
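
To keep the response on disk, the request above can be wrapped in a small helper that writes the body to a file. This is a sketch under assumptions: the endpoint and form fields are copied from the example above, while the function name `run_inference_to_file` and the output path are hypothetical. The shape of the response body is not documented here, so it is saved as-is.

```shell
#!/bin/sh
# Send an image and a prompt to the inference endpoint and save the reply.
# Hypothetical helper: the name and argument order are illustrative only.
run_inference_to_file() {
    image="$1"
    prompt="$2"
    out="$3"

    if [ -z "${API_KEY:-}" ]; then
        echo "error: API_KEY is not set" >&2
        return 1
    fi

    # --fail        : return non-zero on HTTP errors (4xx/5xx)
    # --form-string : send the prompt literally, even if it starts with @ or <
    # --output      : write the response body to a local file
    # No Content-Type header is set; curl generates the multipart one for --form.
    curl --silent --show-error --fail \
        -X POST "https://platform.qubrid.com/api/model/Phi-3-vision-128k-instruct" \
        -H "Authorization: Bearer $API_KEY" \
        --form "file=@$image" \
        --form-string "prompt=$prompt" \
        --output "$out"
}
```

A call such as `run_inference_to_file mango.jpeg "what this image says" response.json` would then leave the raw response in `response.json`.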

7. Execute the Model by Clicking the "Run" Button

- Once your query data is ready and entered, click the Run button.

- The platform will process your input through the selected model.

- Processing time may vary based on the model size and complexity of your query.

Understanding the Inference Output

What to Expect After Running the Model

- The output generated will depend on the model you selected and the nature of the input.

- Examples of output types include:

  - Text generation models: generated text continuations, summaries, or translations.

  - Classification models: predicted labels or categories for your input.

  - Extraction models: specific information extracted from input text.

- The format of the output may be plain text, JSON, or structured data depending on the model design.

Interpreting Results

- Review the output carefully relative to your query.

- Some models provide confidence scores or probabilities indicating prediction certainty.

- Check if the output requires any post-processing (e.g., formatting, filtering) before use in your workflow.
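
As one example of post-processing, predictions can be filtered by their confidence score. The sketch below assumes a hypothetical intermediate format of one `label<TAB>score` pair per line; actual model output will differ and would need to be converted to this shape first. The helper name `filter_by_confidence` and the default threshold of 0.5 are also assumptions.

```shell
#!/bin/sh
# Keep only predictions whose confidence meets a threshold.
# Assumed input (hypothetical): one "label<TAB>score" pair per line on stdin.
filter_by_confidence() {
    threshold="${1:-0.5}"
    # Adding 0 forces a numeric comparison; matching lines are printed unchanged.
    awk -F '\t' -v t="$threshold" '$2 + 0 >= t + 0 { print $1 "\t" $2 }'
}
```

For instance, `filter_by_confidence 0.8 < predictions.tsv` would keep only the lines scored at 0.8 or higher.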

Troubleshooting and Tips

- If results are confusing or seem incorrect, verify that:

  - Your input format strictly adheres to the model’s requirements.

  - The selected model is appropriate for your task.

- Consult the model documentation for details on limitations and recommended best practices.

- Consider running several test queries to understand model behavior and output style.

Summary

By following these steps, you can effectively use the platform’s open source inferencing models to analyze your data or generate predictions. Make sure to:

- Choose the model that best fits your application.

- Prepare and format your input carefully.

- Review the output critically to extract meaningful insights.

If you have further questions or need assistance with specific models, feel free to ask!

- Join our Discord group to connect with the community and share your feedback directly, or email us at digital@qubrid.com.