OpenAI’s Game-Changing Open GPT Model - Deploy GPT-OSS on Qubrid AI GPUs


Written by the Marketing Team at Qubrid AI. If a marketer can deploy GPT-OSS this easily, so can you!


The AI industry is evolving at lightning speed. Every month, we see breakthroughs in large language models (LLMs), generative AI, and machine learning research. But the latest release from OpenAI has created a true inflection point: GPT-OSS.

For the first time since GPT-2, OpenAI has released an open-weight GPT-style model that anyone can download, run locally, fine-tune, and extend into production systems.

GPT-OSS is available in two sizes:

- GPT-OSS 20B → ~21B parameters, lightweight enough to run on high-end GPUs (16–24 GB VRAM).

- GPT-OSS 120B → ~117B parameters, designed for enterprise-class GPUs like A100s and H100s.

This is huge. Developers now have an Apache-licensed GPT model that offers strong instruction following, reasoning capabilities, and tool use — without vendor lock-in.

But there’s a catch: running GPT-OSS requires serious GPU horsepower and a properly configured AI/ML environment. That’s where Qubrid AI makes the difference.

The Problem: AI/ML Setup Still Wastes Time

Before you can even start experimenting with GPT-OSS, you need to:

- Install and align PyTorch/TensorFlow with the right CUDA/cuDNN versions

- Configure inference frameworks like Ollama, vLLM, or llama.cpp

- Manage dependencies for fine-tuning and structured outputs

- Scale from a single GPU → multi-GPU clusters without breaking everything

This process can take hours — even days. For teams racing to experiment, prototype, or launch products, that’s a serious bottleneck.

The Qubrid AI Solution: AI/ML Packages on GPU Virtual Machines

At Qubrid AI, we’ve solved this problem by creating ready-to-use AI/ML packages, optimized for GPU acceleration and available for instant deployment on our GPU-powered virtual machines.


With Qubrid AI, you get:

- Preinstalled environments → PyTorch, TensorFlow, RAPIDS, CUDA

- Optimized stacks → for training, inference, and fine-tuning

- Faster time-to-value → deploy in minutes, not hours

- Scalability → move from one GPU VM to multi-GPU clusters seamlessly
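If you want to confirm the preinstalled stack before loading a model, a short script can report what the VM actually sees. This is a minimal sketch that assumes PyTorch is among the preinstalled packages; it reports gracefully if PyTorch is missing and does not check TensorFlow or RAPIDS.

```python
"""Quick sanity check of the GPU stack on a freshly launched VM.

Assumption: PyTorch is preinstalled. If it is not importable, the report
says so instead of crashing.
"""
import json


def gpu_stack_report() -> dict:
    """Collect PyTorch, CUDA, cuDNN, and GPU details into a plain dict."""
    try:
        import torch  # assumed to be part of the preinstalled environment
    except ImportError:
        return {"torch": None, "cuda_available": False, "gpus": []}

    report = {
        "torch": torch.__version__,
        # CUDA version PyTorch was built against (None for CPU-only builds)
        "cuda_build": torch.version.cuda,
        "cudnn": torch.backends.cudnn.version() if torch.backends.cudnn.is_available() else None,
        "cuda_available": torch.cuda.is_available(),
        "gpus": [],
    }
    if report["cuda_available"]:
        for i in range(torch.cuda.device_count()):
            props = torch.cuda.get_device_properties(i)
            report["gpus"].append(
                {"name": props.name, "memory_gb": round(props.total_memory / 1e9, 1)}
            )
    return report


if __name__ == "__main__":
    print(json.dumps(gpu_stack_report(), indent=2))
```

On a correctly configured VM you should see `cuda_available: true` and one entry per GPU you selected at launch.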

Instead of wrestling with drivers, compilers, and dependencies, you can focus on what really matters: experimenting with GPT-OSS and building AI applications.

Why GPT-OSS + Qubrid AI is a Perfect Match

Running GPT-OSS locally or in the cloud is resource-intensive.

- GPT-OSS 20B can run on high-end GPUs (like NVIDIA A100 40GB or RTX 6000 Ada).

- GPT-OSS 120B requires enterprise-grade infrastructure (H100s with 80GB VRAM).
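Why do the two sizes land on such different hardware? A rough rule of thumb is weight memory ≈ parameters × bits per parameter ÷ 8. OpenAI ships the bulk of GPT-OSS's weights (the mixture-of-experts layers) in a roughly 4-bit format called MXFP4, so the 4-bit rows are the relevant ones. The sketch below applies the rule to the parameter counts above; the 16-bit and 4-bit widths and the 1.2× headroom factor are illustrative assumptions, and real deployments also need room for the KV cache and activations, so treat the results as ballpark lower bounds rather than official requirements.

```python
# Rough weight-only memory estimate: parameters x bits per parameter / 8.
# Ignores KV cache, activations, and framework overhead (treat as a lower bound).
# Bit widths and the headroom factor are illustrative assumptions.

GPU_MEMORY_GB = {"A100 40GB": 40, "RTX 6000 Ada": 48, "H100 80GB": 80}


def weight_memory_gb(params: float, bits_per_param: float) -> float:
    """Approximate weight memory in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_param / 8 / 1e9


def gpus_that_fit(params: float, bits_per_param: float, headroom: float = 1.2) -> list[str]:
    """GPUs whose memory covers the weights times a headroom factor."""
    needed = weight_memory_gb(params, bits_per_param) * headroom
    return [name for name, mem in GPU_MEMORY_GB.items() if mem >= needed]


if __name__ == "__main__":
    for label, params in [("GPT-OSS 20B", 21e9), ("GPT-OSS 120B", 117e9)]:
        for bits in (16, 4):
            gb = weight_memory_gb(params, bits)
            print(f"{label} @ {bits}-bit: ~{gb:.1f} GB of weights, fits on {gpus_that_fit(params, bits)}")
```

Under these assumptions the 20B model needs about 10.5 GB of weights at 4 bits (all three GPUs fit), while the 120B model needs about 58.5 GB (only the 80 GB H100 fits), which lines up with the hardware guidance above.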

Qubrid AI provides exactly that infrastructure.

With our GPU-ready environments, you can:

- Spin up GPT-OSS 20B with Open WebUI in just a few clicks

- Run experiments with Ollama integration

- Fine-tune GPT-OSS on your own datasets

- Deploy at scale without reconfiguring your environment
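Ollama exposes a small REST API, so you can script experiments instead of typing into a chat window. Here is a minimal sketch using only Python's standard library, assuming Ollama is running on the VM on its default port (11434) and the model has been pulled under the tag gpt-oss:20b; adjust both if your template is set up differently.

```python
# Minimal sketch: send one prompt to a local Ollama server via /api/generate.
# Assumptions: Ollama listens on localhost:11434 and the model tag is "gpt-oss:20b".
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "gpt-oss:20b") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def generate(prompt: str, model: str = "gpt-oss:20b", timeout: float = 300.0) -> str:
    """Send the prompt and return the generated text from the "response" field."""
    with urllib.request.urlopen(build_generate_request(prompt, model), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]


if __name__ == "__main__":
    print(generate("Explain mixture-of-experts models in two sentences."))
```

Setting `"stream": False` returns one JSON object instead of a stream of partial chunks, which keeps the parsing to a single line.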

In short: Qubrid AI is the fastest way to explore GPT-OSS at scale.

Step-by-Step: How to Deploy GPT-OSS 20B on Qubrid AI

Ready to try it yourself? Follow these simple steps:

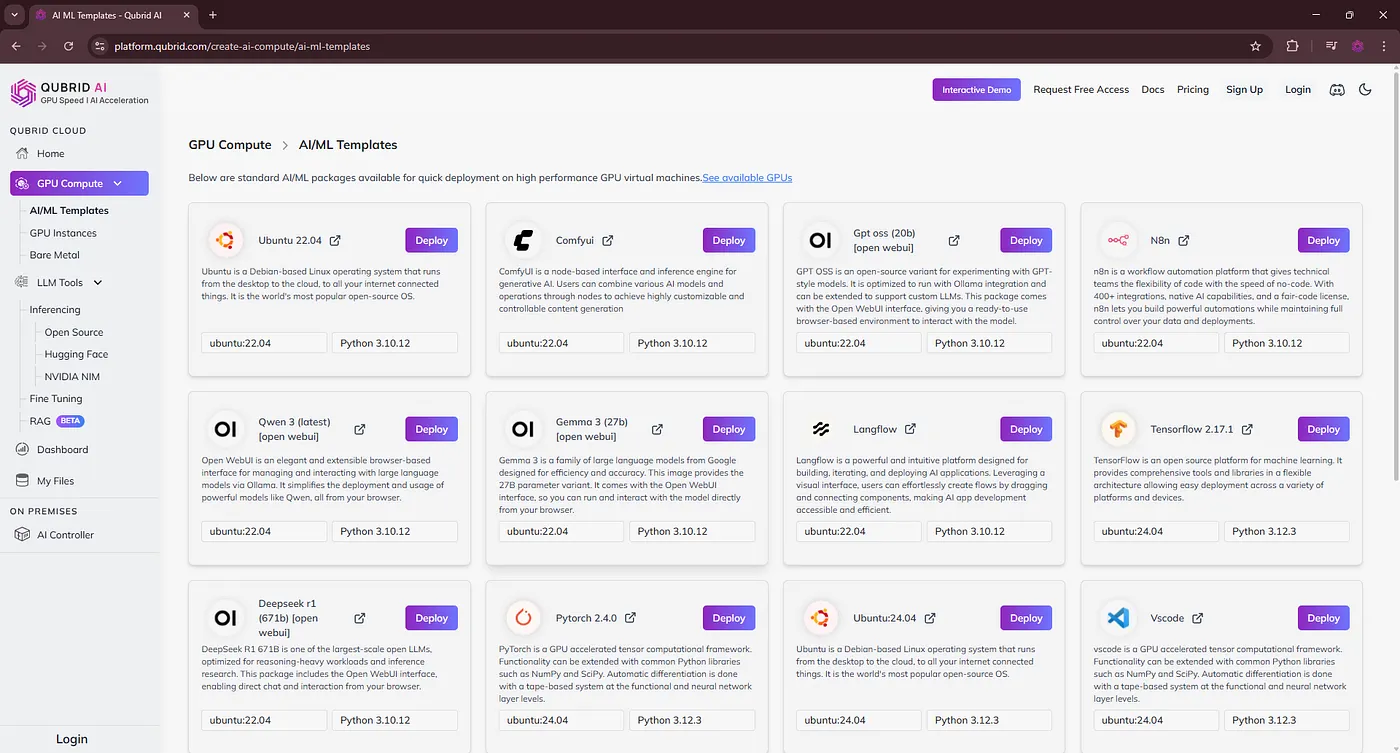

Go to the Qubrid Platform → Head over to AI/ML Templates under the GPU Compute section.

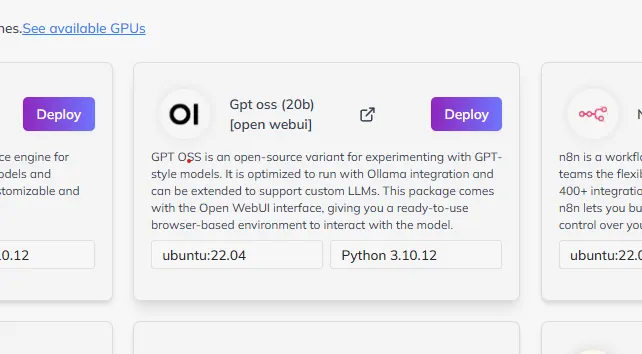

Find GPT-OSS (20B) [Open WebUI] → currently, Qubrid AI supports the 20B model with a browser-ready interface.

Click Deploy → begin configuring your VM.

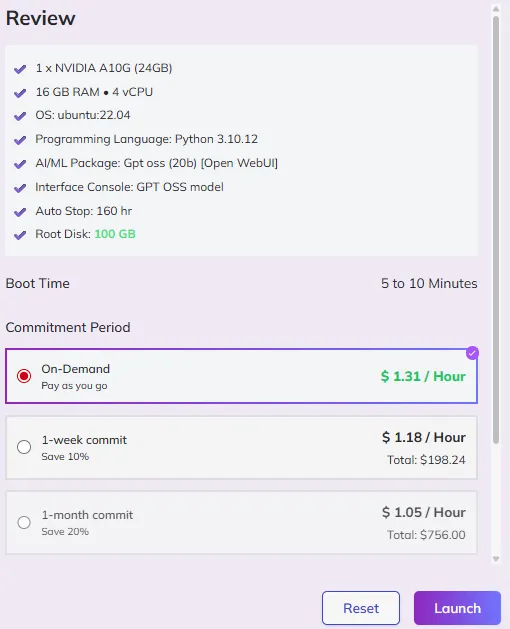

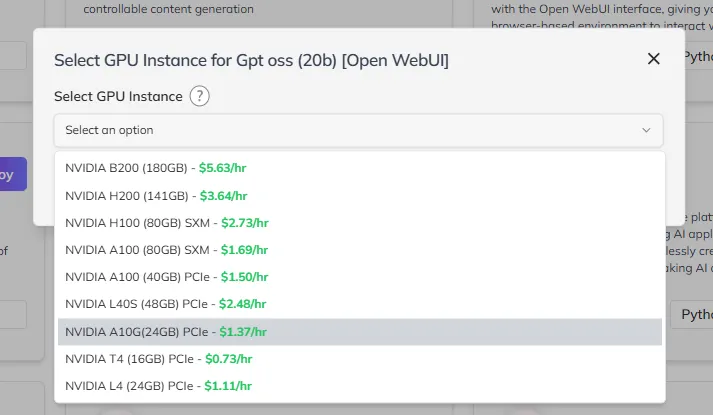

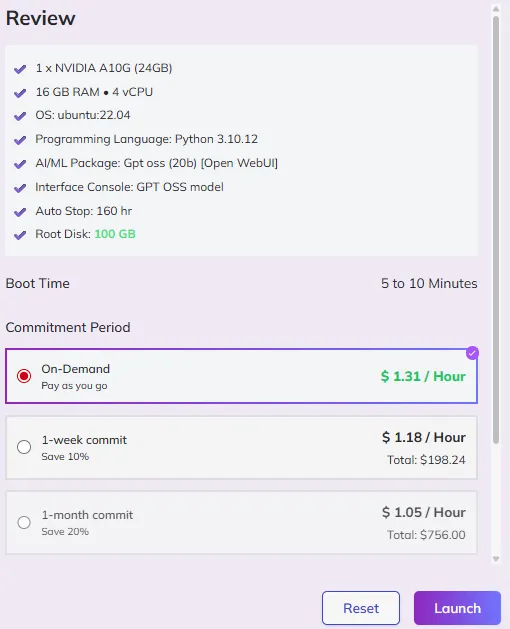

Choose your GPU → select the right GPU type (A100, H100, or other available instances).

Make sure to log in and add credits before selecting a GPU.

Select GPU Count & Root Disk → allocate resources depending on your workload.

GPU Count can be set from 1 to 8, and Root Disk from 100 GB to 2000 GB.
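If you plan launches from a script or a team checklist, it can help to catch out-of-range values before opening the console. The class below is a hypothetical helper, not part of any Qubrid AI API; it only encodes the limits stated above.

```python
# Hypothetical helper (not a Qubrid AI API) that checks a planned VM
# configuration against the documented limits: 1-8 GPUs, 100-2000 GB root disk.
from dataclasses import dataclass


@dataclass(frozen=True)
class VmPlan:
    gpu_type: str
    gpu_count: int
    root_disk_gb: int

    def problems(self) -> list[str]:
        """Return human-readable issues; an empty list means the plan is within limits."""
        issues = []
        if not 1 <= self.gpu_count <= 8:
            issues.append(f"gpu_count must be 1-8, got {self.gpu_count}")
        if not 100 <= self.root_disk_gb <= 2000:
            issues.append(f"root_disk_gb must be 100-2000, got {self.root_disk_gb}")
        return issues


if __name__ == "__main__":
    print(VmPlan("A100", 1, 200).problems())  # within limits
    print(VmPlan("H100", 16, 50).problems())  # both values out of range
```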

Enable SSH (Optional) → toggle the option, provide your public key, and gain full SSH access.

If you don’t already have an SSH key pair, generate one first, then paste only the public key (the contents of the .pub file) into Qubrid AI.
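On Linux, macOS, or Windows with OpenSSH installed, ssh-keygen creates the key pair. The sketch below wraps it in Python; the key path and comment are example values, and ssh-keygen will prompt you for an optional passphrase. Paste only the public key it prints; the private key stays on your machine.

```python
# Sketch: create an Ed25519 key pair with OpenSSH's ssh-keygen and print the
# public key to paste into Qubrid AI. Assumes ssh-keygen is on your PATH.
import subprocess
from pathlib import Path


def keygen_command(key_path: Path, comment: str) -> list[str]:
    """Arguments for ssh-keygen: -t key type, -f output file, -C comment label."""
    return ["ssh-keygen", "-t", "ed25519", "-f", str(key_path), "-C", comment]


def public_key_path(key_path: Path) -> Path:
    """ssh-keygen writes the public half next to the private key with '.pub' appended."""
    return Path(str(key_path) + ".pub")


def create_key(key_path: Path, comment: str) -> str:
    """Generate the key pair if it doesn't exist yet; return the public key line."""
    if not key_path.exists():
        # ~/.ssh should only be readable by you
        key_path.parent.mkdir(mode=0o700, parents=True, exist_ok=True)
        # ssh-keygen prompts interactively for an optional passphrase
        subprocess.run(keygen_command(key_path, comment), check=True)
    return public_key_path(key_path).read_text().strip()


if __name__ == "__main__":
    key = Path.home() / ".ssh" / "qubrid_ed25519"  # example path, pick any name
    print(create_key(key, "me@laptop"))  # paste this line into Qubrid AI
```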

Set Autostop (Optional) → configure the VM to automatically stop after a chosen period to save costs.

We recommend this so your credits aren’t wasted if you forget to turn off the instance.
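To see why autostop matters, here is a back-of-the-envelope comparison. The hourly rate is a made-up placeholder (check the console for real prices), and the sketch assumes the autostop timer counts from launch.

```python
# Back-of-the-envelope cost of a forgotten VM, with and without autostop.
# Assumptions: billing is per GPU-hour, and autostop counts from launch.
from typing import Optional


def billed_cost(rate_per_gpu_hour: float, gpu_count: int, hours_running: float,
                autostop_hours: Optional[float] = None) -> float:
    """Cost of one session; with autostop, billing stops at `autostop_hours`."""
    hours = hours_running if autostop_hours is None else min(hours_running, autostop_hours)
    return rate_per_gpu_hour * gpu_count * hours


if __name__ == "__main__":
    RATE = 2.50  # hypothetical $/GPU-hour, not a real Qubrid AI price
    # You work for ~3 hours, then leave the VM running over a weekend (~60 hours total).
    without = billed_cost(RATE, 1, 60)
    with_stop = billed_cost(RATE, 1, 60, autostop_hours=4)
    print(f"without autostop: ${without:.2f}, with a 4-hour autostop: ${with_stop:.2f}")
```

The gap scales with both GPU count and how long the instance sits idle, so it matters most on multi-GPU VMs.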

Click Launch, and you’re done! 🎉

Choose your period carefully, since commitments get lower prices.

In about 5–10 minutes, you’ll have GPT-OSS 20B running with Open WebUI, ready to chat, test prompts, or fine-tune.
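Open WebUI also exposes an OpenAI-compatible chat endpoint, which is handy once you move from manual testing to scripts. A minimal sketch, assuming you have generated an API key in Open WebUI’s account settings and that the model is listed as gpt-oss:20b; OPENWEBUI_URL and OPENWEBUI_API_KEY are environment variable names chosen here for illustration.

```python
# Sketch: call Open WebUI's OpenAI-compatible /api/chat/completions endpoint.
# Assumptions: OPENWEBUI_URL / OPENWEBUI_API_KEY are illustrative env var names,
# and the model id shown in Open WebUI is "gpt-oss:20b".
import json
import os
import urllib.request


def build_chat_request(base_url: str, api_key: str, prompt: str,
                       model: str = "gpt-oss:20b") -> urllib.request.Request:
    """Build a POST to {base_url}/api/chat/completions with an OpenAI-style body."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        base_url.rstrip("/") + "/api/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send one user message and return the assistant's reply text."""
    req = build_chat_request(os.environ["OPENWEBUI_URL"], os.environ["OPENWEBUI_API_KEY"], prompt)
    with urllib.request.urlopen(req, timeout=300) as resp:
        data = json.loads(resp.read().decode("utf-8"))
    # OpenAI-compatible responses put the reply in choices[0].message.content
    return data["choices"][0]["message"]["content"]


if __name__ == "__main__":
    print(chat("Give me three test prompts for evaluating a reasoning model."))
```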

Example Use Cases

Here’s what you can build with GPT-OSS + Qubrid AI:

- Researchers & Developers → Fine-tune GPT-OSS for healthcare, finance, or legal datasets.

- AI Startups → Rapidly prototype LLM-powered applications without weeks of infra setup.

- Enterprises → Deploy secure, internal AI assistants powered by GPT-OSS on private GPU VMs.

- Educators & Communities → Provide students and teams with access to GPT-OSS in workshops or hackathons.

DIY Setup vs Qubrid AI Deployment

| DIY Setup | Qubrid AI Deployment |
|---|---|
| 8–12 hours of environment setup (drivers, CUDA, configs) | Up and running in about 5–10 minutes |
| Hard to source enterprise GPUs locally | On-demand access to A100s & H100s |
| Manual cluster setup required | One-click multi-GPU scaling |
| Pay for idle hardware | Pay-as-you-go with autostop option |
| High friction, error-prone experimentation | Smooth, browser-ready Open WebUI |

The difference is clear: Qubrid AI lets you skip the friction and get straight to innovation.

Why Qubrid AI is the Right Platform for GPT-OSS

- Performance → Enterprise-grade GPUs tuned for AI workloads.

- Speed → GPT-OSS up and running in minutes.

- Scalability → Start small, grow into distributed clusters effortlessly.

- Flexibility → Use prebuilt stacks or bring your own AI workflows.

With GPT-OSS + Qubrid AI, you’re not just experimenting — you’re building production-ready AI.

What’s Next

We’re just getting started. At Qubrid AI, we’re expanding our AI/ML templates to include:

- Pre-tuned GPT-OSS models for industry-specific domains

- Seamless integration with LangChain, LlamaIndex, and RAG pipelines

- One-click deployments for fine-tuning GPT-OSS on private datasets

Our vision: make open-source LLMs + GPU cloud infrastructure accessible to everyone.

Our take on this?

AI is moving from closed APIs to open, customizable models. GPT-OSS is a major milestone in that journey.

At Qubrid AI, we provide the infrastructure to deploy GPT-OSS quickly, run it at scale, and fine-tune it for your unique needs. Whether you’re a researcher, a startup founder, or an enterprise team, Qubrid AI helps you move from idea → prototype → production faster.

Deploy GPT-OSS 20B on Qubrid AI GPU VMs today and start building the next generation of AI applications.